AI systems rely on machine learning, a process that trains a system by optimizing its parameters on data collected from the real world. That data represents a snapshot of the world, taken during the period in which it was collected.

This makes an AI system vulnerable to changes in its operational environment. For example, if a self-driving car finds itself navigating an area with traffic signs that are not represented in its training data, it may fail to operate the car correctly.

Drift is the term AI engineers use for this degradation in machine learning system performance caused by changes in the operational environment. Drift is widely recognized as a problem, and articles on the subject discuss data drift, concept drift, model drift, and covariate shift. These terms for different types of drift are not standardized, and they can overlap in confusing ways.

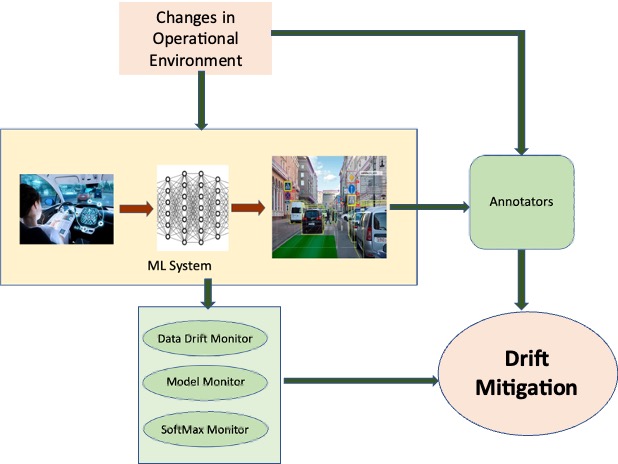

In my iMerit blog article "Staying Ahead of Drift in Machine Learning Systems" I clarify drift by putting it in the context of the three basic components of a machine learning system: feature extraction, model encoding, and output decoding. I illustrate the types of drift with a simplified example, and show how to modify machine learning systems to remedy drift. Finally, I describe a comprehensive approach to detecting and mitigating drift, as summarized in the accompanying figure from the article.
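To make the idea of detecting drift concrete, here is a minimal sketch of one common check: comparing the distribution of a single input feature in the training data against the same feature in recent production data. It uses the two-sample Kolmogorov-Smirnov statistic, which is my choice for illustration and not necessarily the approach described in the article. The function names, the synthetic data, and the `threshold` value of 0.1 are all hypothetical; a real system would pick a threshold based on sample size and tolerance for false alarms.

```python
import random
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic.

    Returns the largest absolute gap between the empirical CDFs
    of the two samples (0.0 = identical, 1.0 = fully separated).
    """
    ref = sorted(reference)
    cur = sorted(current)
    largest_gap = 0.0
    # The largest gap always occurs at one of the observed values.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        largest_gap = max(largest_gap, abs(cdf_ref - cdf_cur))
    return largest_gap


def has_drifted(reference, current, threshold=0.1):
    """Flag drift when the KS statistic exceeds a threshold.

    The default threshold is an illustrative assumption, not a
    recommended value.
    """
    return ks_statistic(reference, current) > threshold


# Synthetic stand-ins for one feature (assumed data, for illustration).
rng = random.Random(0)
training = [rng.gauss(0.0, 1.0) for _ in range(2000)]
production_same = [rng.gauss(0.0, 1.0) for _ in range(2000)]
production_shifted = [rng.gauss(1.0, 1.0) for _ in range(2000)]

print("unchanged environment drifted:", has_drifted(training, production_same))
print("shifted environment drifted:", has_drifted(training, production_shifted))
```

A check like this only looks at inputs, so it can flag a change in the data the system sees but says nothing about whether the relationship between inputs and correct outputs has changed. Catching that kind of drift generally requires monitoring model performance against labeled production samples.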