Artificial intelligence gets its name from the fact that AIs perform tasks associated with human intelligence, such as recognizing faces or understanding language or playing chess. For these tasks, we can measure AI performance and compare it to human performance, using a single ‘yardstick’, such as accuracy or word error rate or games won.

But can artificial intelligence and human intelligence be compared in a general way, using a single yardstick? Is there a general intelligence scale upon which, for example, humans might average 500, today’s best AIs 275, and future superintelligent AIs 1000?

Of course, it is difficult to measure even human intelligence on a single scale. It is generally acknowledged that measures like IQ tests, while useful as predictors of particular capabilities, do not capture the breadth of human intelligence.

However, putting aside the fundamental difficulty of quantifying intelligence, human or otherwise, can we compare human and artificial intelligence, beyond performance on specific tasks? Should we talk about humans being smarter than AIs, or vice versa? I would say ‘No’. Today human and artificial intelligence are so different that it doesn’t make sense to try to compare them along a single scale.

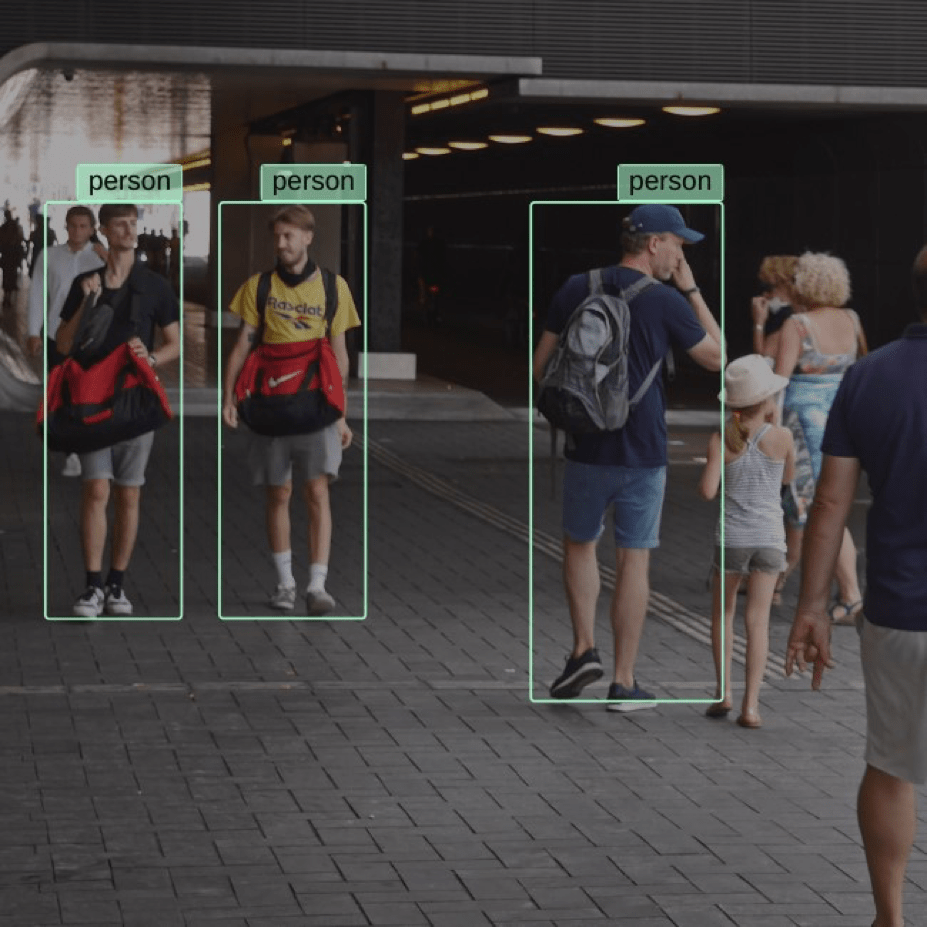

One striking difference between AIs and humans shows up in the way deep neural networks work. These networks, at the heart of today’s most advanced AIs, learn patterns from huge masses of data, and use these patterns to ‘understand’ things like visual images or language. However, the way these networks ‘perceive’, ‘learn’, and ‘understand’ the world is decidedly non-human.

Let’s consider machine translation as an example. First, a little history. In 1954 an IBM 701 computer was programmed with a dictionary and rules that allowed translation of Russian sentences into English. The results were so encouraging that it was predicted that the problem of automatic machine translation would be completely solved in 3 to 5 years.

However, in the next 10 years little progress was made. Research in machine translation came to be considered such a long shot that funding was drastically curtailed. Critics at the time pointed out that human translation requires complex cognitive processing that would be extremely difficult or impossible to program into computers. When humans interpret language, we don’t just hear it as sounds or see it as symbols; we understand it as objects, actions, ideas, and relationships, and that is key to our understanding of language.

In the following decades, researchers in machine translation tried to get closer to human understanding by developing complex models of linguistic structure and meaning. While this enabled machine translators to gradually improve, human translators still performed much better.

In more recent years, deep neural networks began to be applied to machine translation, greatly improving performance. In 2016, Google announced the GNMT system, a deep neural network that reduced translation errors by 60% compared to previous methods.

How did GNMT achieve this quantum jump in performance? Did Google engineers finally figure out how to program the kind of understanding into their computers that humans need to make good translations?

The answer to this last question is: “No, quite the opposite!” The designers of GNMT did away with any attempt to incorporate human-like knowledge. GNMT relies on none of the complex models of language structure and meaning used by previous methods.

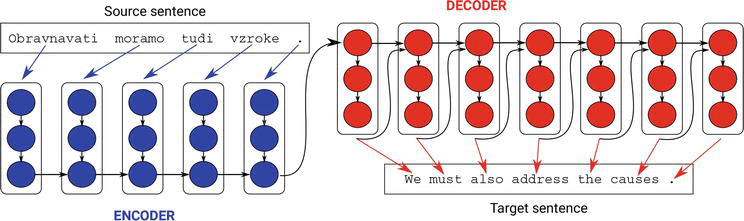

Instead, GNMT uses a type of neural network called Long Short-Term Memory (LSTM). Basically, LSTM [Note 1] allows sequences of output numbers (translated sentences) to be calculated from sequences of input numbers (sentences to be translated). The calculations in GNMT are controlled by hundreds of millions of parameters. Millions of examples are used to set these parameters, through a trial-and-error adjustment procedure.

As an illustration of how different a deep neural network translator is from a human translator, consider how such a system typically represents a word to be translated. A translator with a 10,000-word vocabulary, for example, might represent each word by a string of 10,000 ones and zeroes, with a one at the word’s position in the vocabulary list, and zeroes everywhere else. This way of representing words is called ‘one-hot’ encoding [Note 2]. Experimentation has shown it works well with deep neural networks doing language processing [Note 3].

For example, if the word ‘elephant’ is the 2897th word in the translator’s vocabulary, what the machine translator ‘sees’ when presented with the word is a string with 9,999 zeroes, with a single one in position 2897 of the string. All it ‘knows’ about ‘elephant’ at this point, is that it is word number 2897 in its vocabulary.
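The ‘elephant’ example can be sketched in a few lines of Python. This is only an illustration of one-hot encoding in general, not GNMT’s actual code: the function name `one_hot` and the placeholder vocabulary (`word1`, `word2`, ...) are assumptions made up for this sketch.

```python
def one_hot(word, vocabulary):
    """Return a list of zeroes with a single one at the word's vocabulary position."""
    vector = [0] * len(vocabulary)
    vector[vocabulary.index(word)] = 1
    return vector

# Hypothetical 10,000-word vocabulary made of placeholder words,
# with 'elephant' placed at position 2897 (counting from 1).
vocabulary = [f"word{i}" for i in range(1, 10001)]
vocabulary[2896] = "elephant"  # Python lists count from 0, so index 2896

vector = one_hot("elephant", vocabulary)

print(len(vector))          # length of the string of ones and zeroes
print(vector.count(0))      # number of zeroes
print(vector.index(1) + 1)  # position of the single one, counting from 1
```

Nothing in `vector` says anything about trunks, tusks, or size. The only information it carries is the word’s position in the list.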

Contrast this with a human translator, who probably recalls many things about elephants as soon as the word is encountered.

The power of a deep neural network comes from its ability to find patterns in word occurrence by analyzing millions of translated documents. Machine translation has always used patterns of word occurrence, for example, which words are more likely to follow other words. However, deep neural networks take this to a whole new level, recognizing extremely complex patterns that link and relate many words to many other words.
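To make the simpler, older kind of pattern concrete, here is a minimal sketch that counts which words follow which other words. It is not how GNMT works; it only illustrates the basic idea of a word-occurrence pattern. The function name `follower_counts` and the three-sentence corpus are invented for this example.

```python
from collections import Counter, defaultdict

def follower_counts(sentences):
    """For each word, count how often each other word comes directly after it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        # Pair each word with the word that immediately follows it.
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

# Tiny made-up corpus; a real system would use millions of documents.
corpus = [
    "where is my car",
    "where is my house",
    "my car is red",
]

counts = follower_counts(corpus)
print(counts["my"].most_common(1))  # [('car', 2)]
```

In this toy corpus, ‘car’ follows ‘my’ twice and ‘house’ follows it once, so ‘car’ is the more likely next word. A deep neural network learns far richer relationships than these pairwise counts, but it is still working from word occurrence rather than word meaning.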

That these huge deep neural network translators can be built and perform so well is a tribute to years of creative engineering and systematic experimentation in AI. Decades ago, no one really knew that it would be possible to train a network with hundreds of parameters, let alone hundreds of millions. And it was equally unexpected that good machine translation could be done by using only patterns of word occurrence, without any reference to word meaning.

GNMT is an engineering marvel, to be sure. However, its mechanistic translation incorporates nothing about what words refer to in the real world. It ‘knows’ the Japanese sentence ‘Watashi no kuruma wa doko desu ka?’ translates to ‘Where is my car?’, but it has no idea what a car is (other than the words that ‘car’ is associated with) or that the question refers to a location on planet Earth that the questioner is likely to walk to.

Today, we compare machine and human translation, and the machines are looking very good. But what does this tell us about how artificial and human intelligence compare? Is this an example of AI catching up to human intelligence? No, it is only machine translation catching up to human translation.

Note 1: Four years is a long time in AI, and further progress has been made since GNMT. Transformer architectures have replaced LSTM as the architecture of choice for many applications in language processing. The evolution from LSTM to Transformer is an example of a fascinating aspect of AI deep learning progress: simpler architectures often perform better, when more compute power becomes available. GNMT’s LSTM is an example of a ‘bi-directional recurrent neural network with memory states and attention’, which is as complicated as it sounds – sentences are sequentially processed through a neural network that updates states that represent how words depend on other words that come before and after, and how far ahead or backwards words make a difference. Transformers do away with a lot of that, and just take in whole sentences at once.

Note 2: Generally, encoding just means transforming one representation to another, according to a set of rules, like converting ‘elephant’ to a string of ones and zeroes. Encoding is used in a couple of other senses in GNMT. The diagram at the start of this blog shows that an LSTM-type neural network can be divided into a front-end encoder and a back-end decoder. The encoder and decoder in this case describe mapping from the input language to the neural network’s internal representation (encoding), and mapping from the internal representation to the output language (decoding). These mappings are what the neural network learns by training on millions of examples. Also note that language translation itself is a form of encoding – a transformation of the input language to the output language.

Note 3: Although one-hot encoding is frequently used in LSTM neural networks, GNMT actually uses a more sophisticated technique that encodes word segments instead of complete words. The network learns to break up words in ways that maximize its ability to make good guesses for word translations outside its vocabulary.